Streaming Data From A File To Power BI Streaming Dataset Using Python

Create streaming dataset based on an existing file using Python. Super handy for POCs.

Streaming Dataset

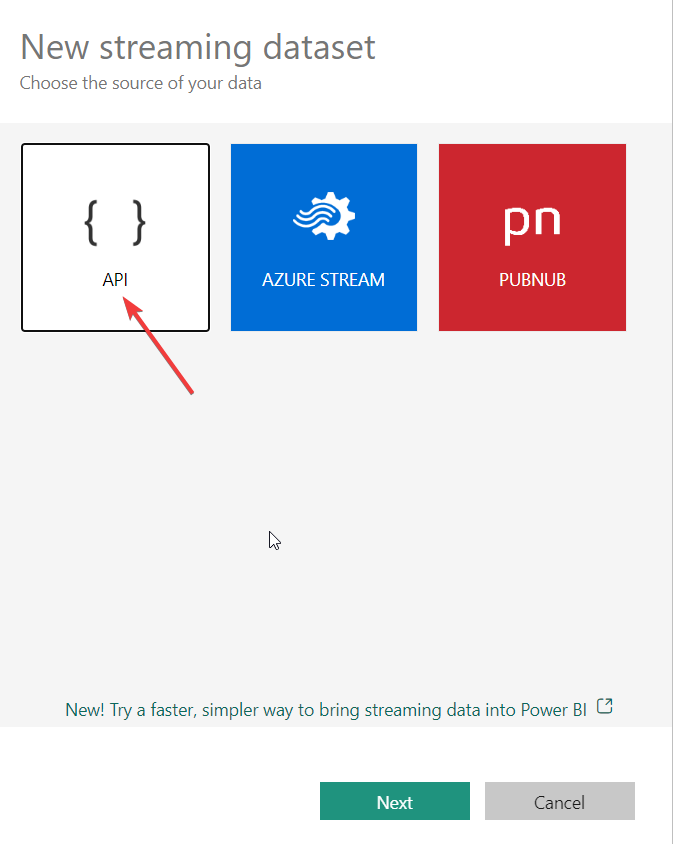

It's easy to create a streaming dataset in Power BI for real-time applications. You do not need a premium capacity. To keep the blog short, I am going to assume you already know what is a streaming dataset and how it differs from import/DQ. If you are not familiar, this documentation will help.

Imagine you are creating a POC and would like to develop the real-time dashboard, report or just want to show to your stakeholders what the solution would loook like, you will need data to stream to Power BI service. You can create Azure Event Hub, Streaming Analytics. But in this blog, I will show you how you can stream data from an existing file. I will also include a link below in the resources for a few sample datasets you can use depending on the use case.

You will need a Python IDE with pandas installed.

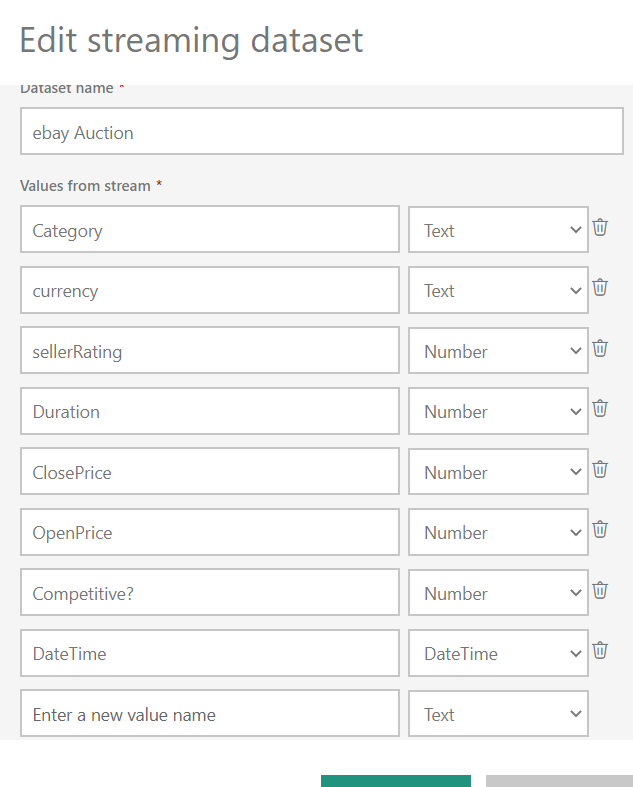

I will be using this dataset for my example. Note the columns and column types from your data and create the dataset in Power BI service accordingly.

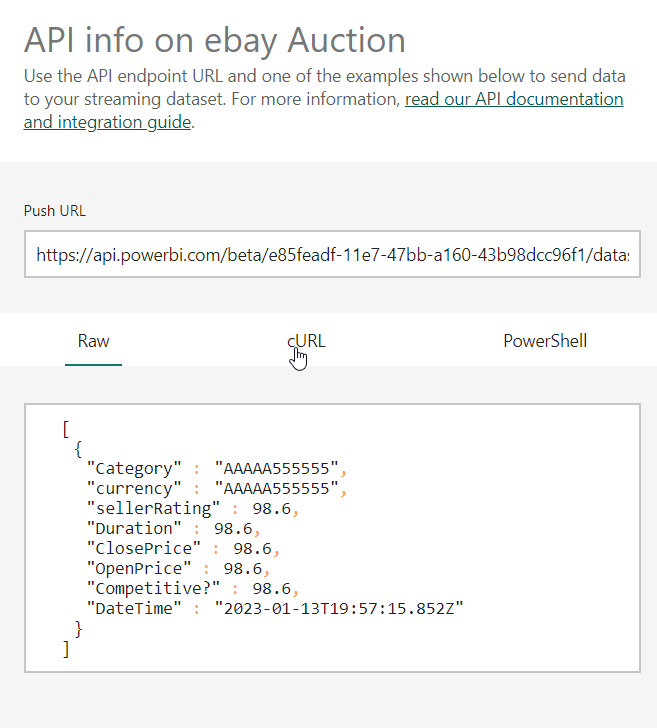

Note the API endpoint:

That's it. We are now ready to stream the data to Power BI service.

import requests

from datetime import datetime

from dataclasses import dataclass

import numpy as np

from typing import List, Dict

from time import sleep

import pandas as pd

@dataclass

class StreamToPowerBI:

"""

Class to stream data to Power BI Streaming dataset

--------------------------------------------------

Example:

- endpoint : API ednpoint, e.g. "https://api.powerbi.com/beta/<ws_id>/datasets/<dataset_id>/rows?key=<___key___>"

- data : Data in dictionary e.g. {"id":1, "Value":2.33, "Color":"Red"}

Number of columns, column names and column type must match with Power BI.

- delay : Delay in seconds in sending the data to Power BI. e.g. if delay is 3, data will be sent every 3 seconds.

default value is 2 seconds. To overwrite, use the wait() method

example: To stream data to the specified endpoint with 5 s delay

StreamToPowerBI(endpoint,data).wait(5).post_data()

"""

endpoint: str

data: Dict

delay: int=2

def wait(self, other=None):

''' Delay in seconds to stream the data '''

if other==None:

# default value is 2 seconds

other = 2

sleep(other)

return self

def post_data(self):

''' Use post method send the data to Power BI'''

if isinstance(self.data, dict):

endpoint = self.endpoint

payload = [self.data]

x = requests.post(endpoint, json = payload)

if x.status_code==200:

x=x

else:

print("Failed ! Check endpoint, data")

else:

print("Data passed is not a dictionary. It should be in the format {}".format('{}'))

return x

df = (pd.read_csv("https://raw.githubusercontent.com/reisanar/datasets/master/eBayAuctions.csv",

index_col=None,

dtype={'Category':str,

'currency':str,

'sellerRating':float,

'Duration':float,

'ClosePrice':float,

'OpenPrice':float,

'Competitive?':float},

usecols=['Category','currency','sellerRating','Duration','ClosePrice','OpenPrice','Competitive?'])

).dropna()

Before streaming data from the whole file, let's first test using one data point. Note that in the orginal data, there is no timestamp, so I am adding the timestamp , i.e DateTime column, to the dictionary. In your case, you may not want to do that. comment it out if you want to stream the data as is.

d = dict(df.iloc[0])

d['DateTime']=datetime.now().isoformat()

d

endpoint = "https://api.powerbi.com/beta/xxxxxxx/datasets/xxxxxxxxxx"

StreamToPowerBI(endpoint,d).wait(1).post_data()

If the reponse returned is <Response [200]>, it was successful. We can now stream more data.

for record in range(0,len(df)):

data = dict(df.iloc[record])

#including datetime is optional. If you do want it, make sure it's included in the streaming dataset defintion in Power BI service

data['DateTime']=datetime.now().isoformat()

StreamToPowerBI(endpoint,data).wait(1).post_data()

x = 0.1

for record in range(0,len(df.sample(frac=x))):

data = dict(df.iloc[record])

#including datetime is optional. If you do want it, make sure it's included in the streaming dataset defintion in Power BI service

data['DateTime']=datetime.now().isoformat()

StreamToPowerBI(endpoint,data).wait(1).post_data()

Resources

- Documentation : https://learn.microsoft.com/en-us/power-bi/connect-data/service-real-time-streaming

- Example datasets: https://github.com/reisanar/datasets

- Azure Open Datasets : https://github.com/Azure/OpenDatasetsNotebooks/